The Pentagon used Claude, the artificial intelligence model developed by Anthropic, in the operation carried out in Venezuela on January 3, which culminated in the capture of now-former Venezuelan dictator Nicolás Maduro.

According to the Wall Street Journal, citing government sources, Claude was used in the operation through Palantir Technologies, whose data analysis platform is widely used by the United States Department of War and other federal security agencies. Palantir partners with Anthropic to enable the use of AI technology in restricted environments, integrating Claude with tools already used by the US military and defense establishment.

The American news outlet Axios reported that two US government sources confirmed the use of the AI model during the operation in Venezuela. According to Axios, the model was used not only in the preparation phase of the operation but also on the day it was carried out. The outlet noted, however, that it was not possible to confirm the exact role the AI tool played in capturing the Venezuelan dictator. The sources consulted by Axios said that the US military's history of using Claude includes satellite image analysis and intelligence processing.

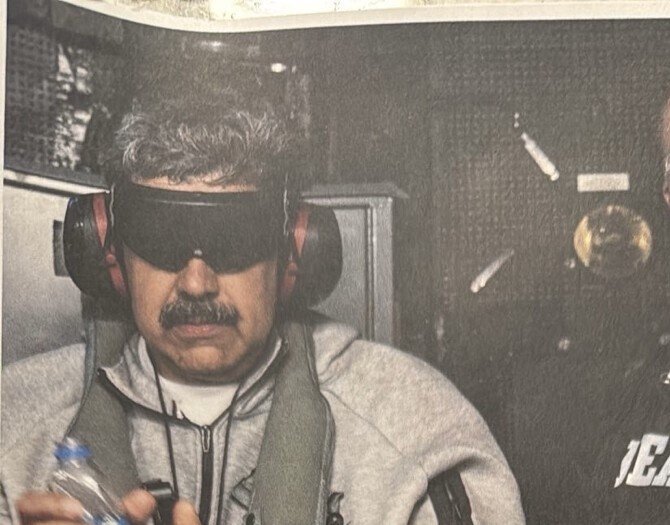

In the January 3 operation, Maduro and his wife, Cilia Flores, were captured and taken to the United States to face federal charges of narcoterrorism, conspiracy and drug trafficking. The American press reported that only seven US soldiers were injured in the operation.

When contacted by the US press, Anthropic said it could not comment on whether its AI tool had been used in the operation in Caracas.

“We cannot comment on whether Claude, or any other AI model, was used in any specific operation, classified or unclassified.” The company added that “any use of Claude – whether in the private sector or government – must comply with our Usage Policies, which govern how Claude can be deployed. We work closely with our partners to ensure compliance.”

The news agency Reuters reported that Anthropic maintains policies prohibiting the use of Claude to support violence, weapons development or surveillance. Even so, the company is currently the only developer of large language models with access to classified government systems through partner companies.